Customer cloud infrastructure for the world's top AI companies

Enable enterprises to run AI agents, fine-tuning pipelines, and self-hosted model inference in their own cloud infrastructure, keeping sensitive data secure while AI vendors maintain operational control.

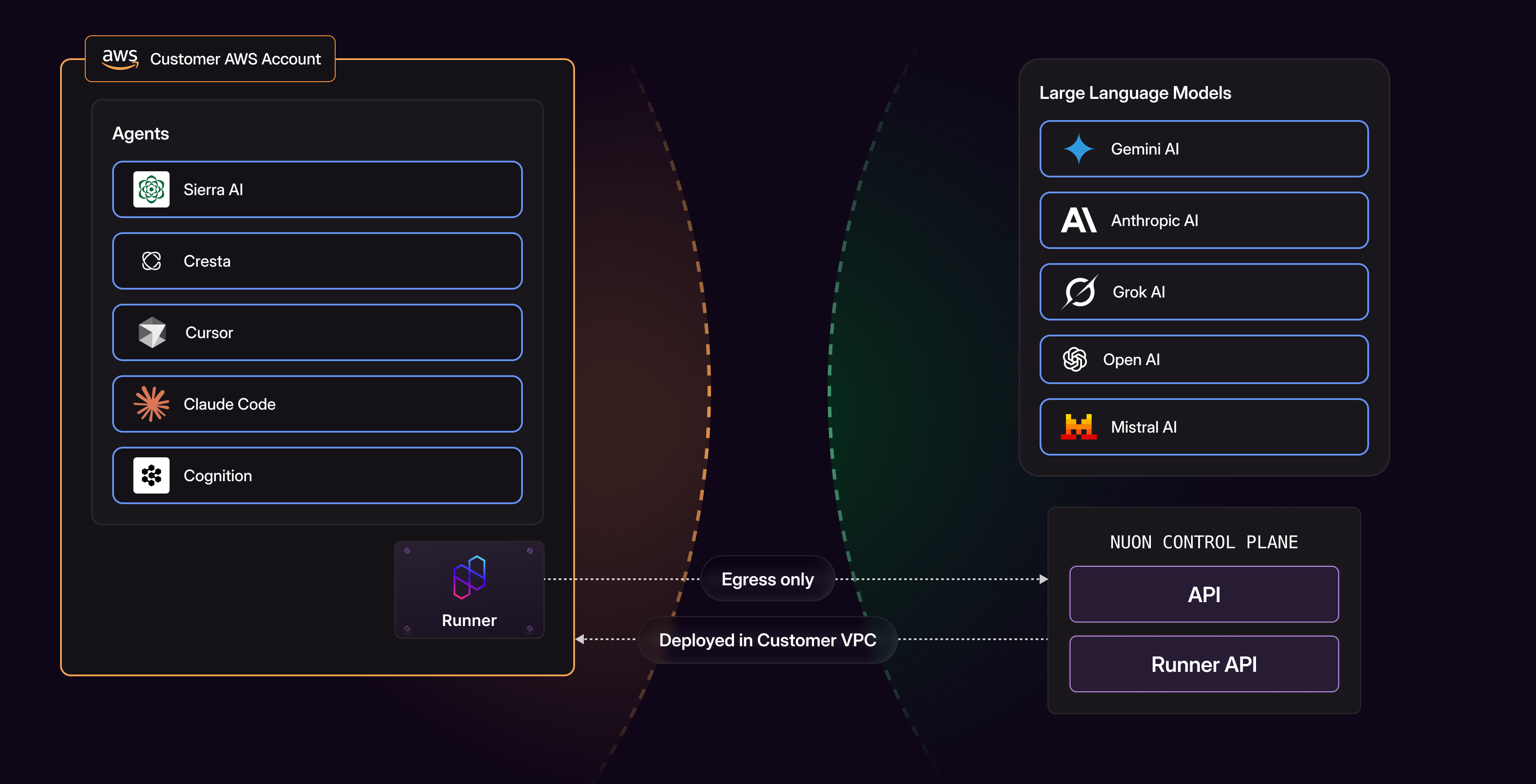

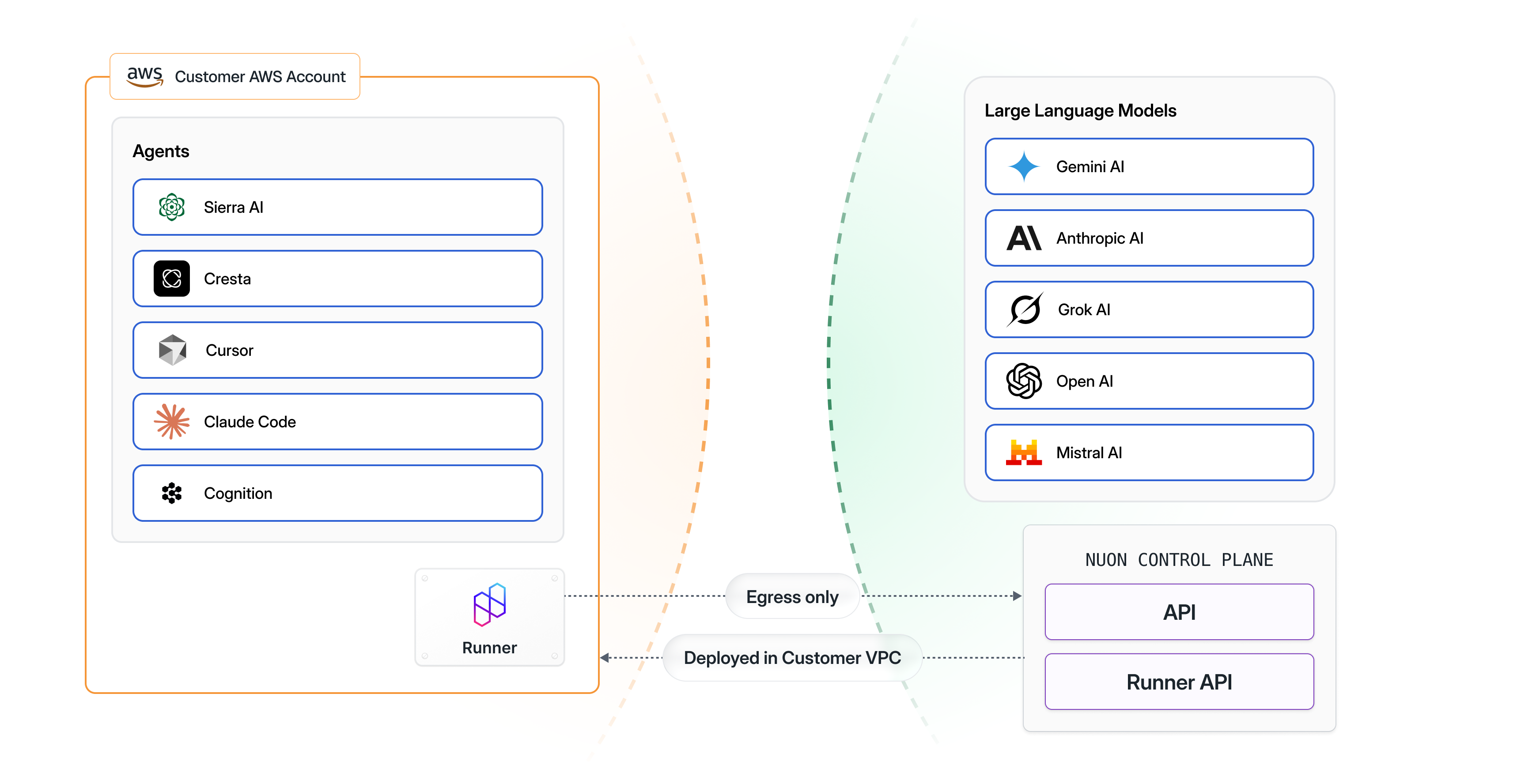

Agents

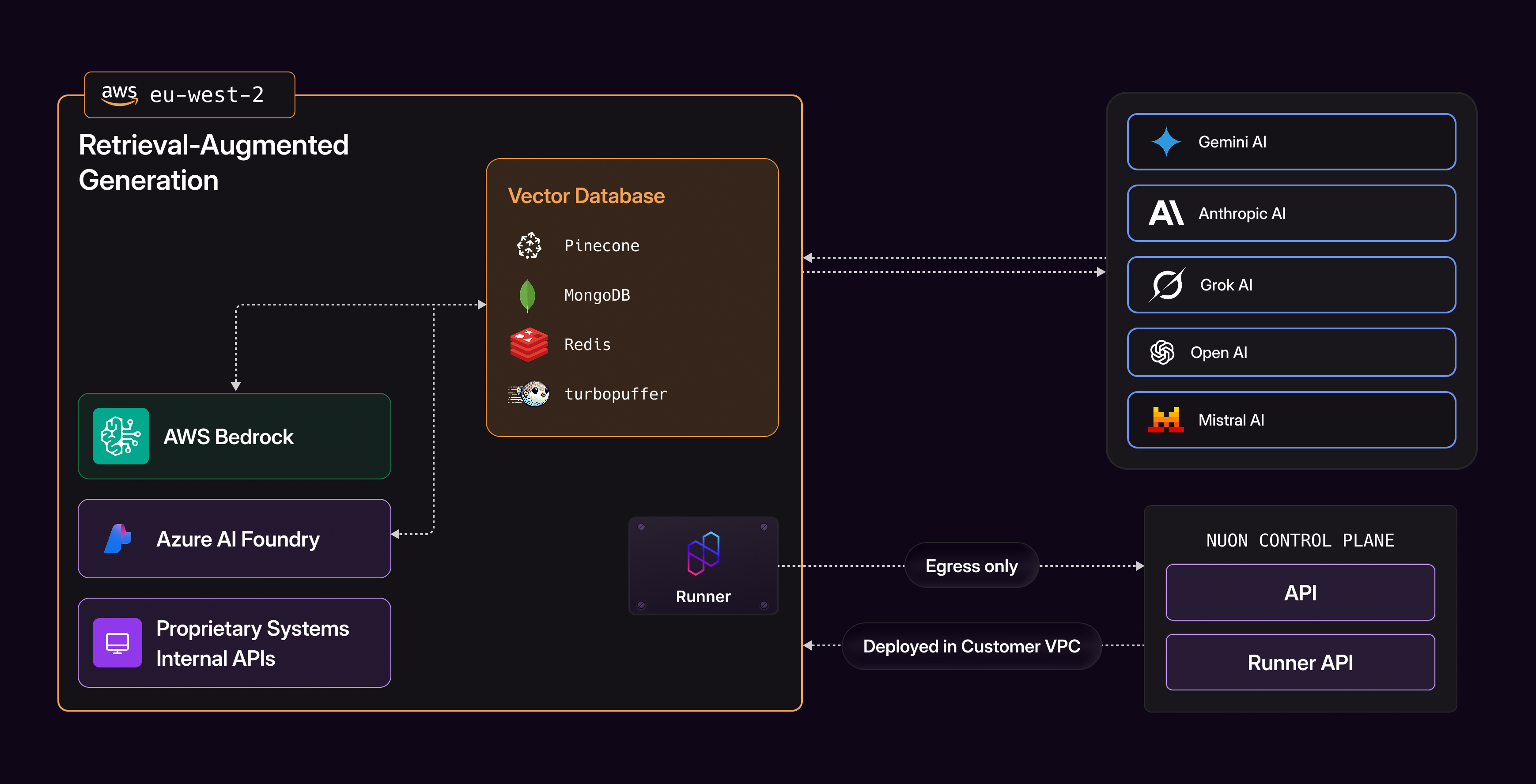

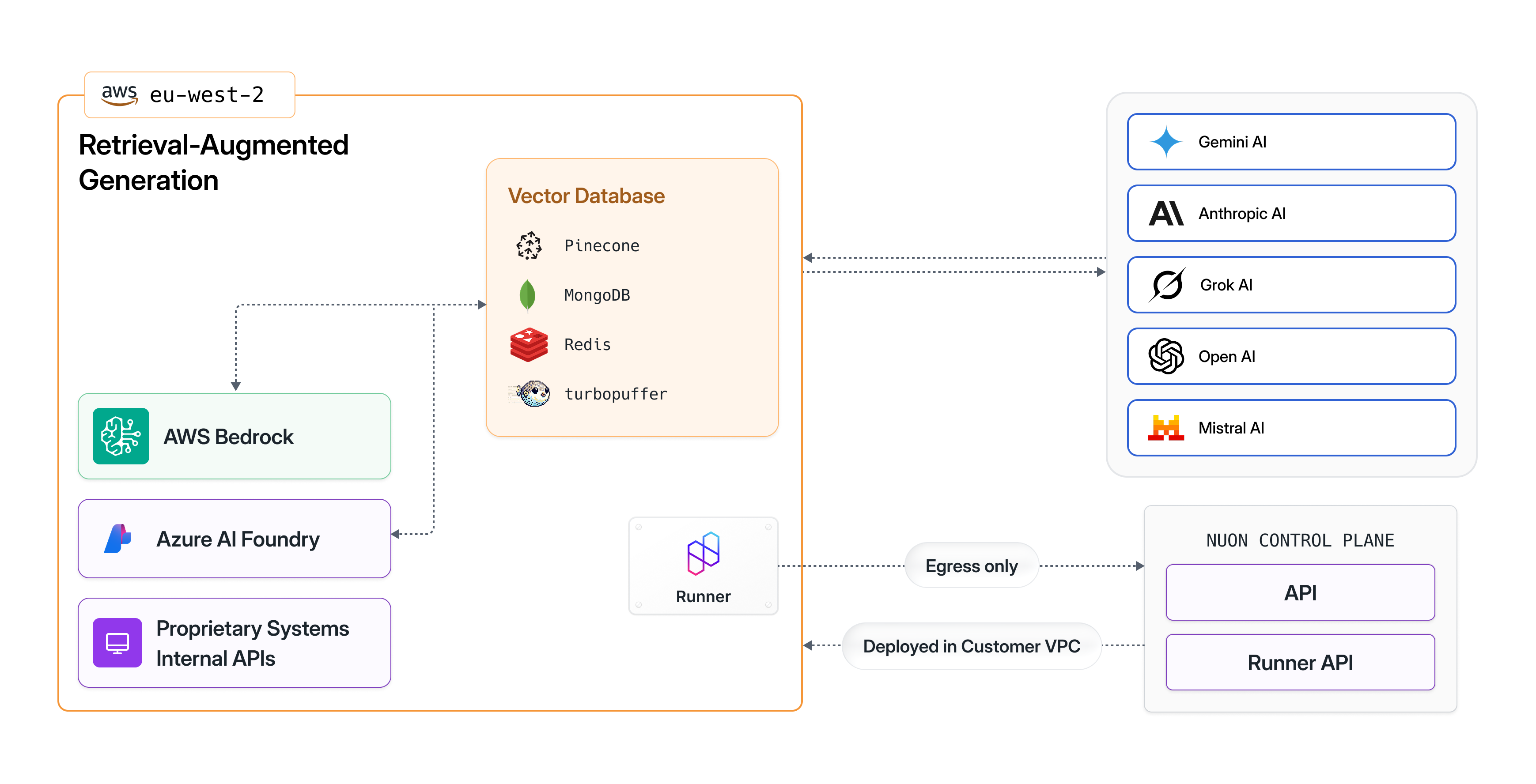

Integrate Agents and Vector Databases

Operate Vector Databases in Customer Cloud Accounts

Keep sensitive data in customer environments while AI apps connect to proprietary models, vector databases, and internal APIs. Process, cache, and transform data locally before making any external API call.
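As a rough illustration of the "process, cache, and transform locally" idea, here is a minimal Python sketch. Everything in it is hypothetical: `LocalGateway`, `redact`, and the email-only redaction rule are assumptions made for this example, not part of Nuon or any vendor API. It shows a small component running inside the customer environment that scrubs a prompt and caches responses before anything is sent to an external service.

```python
import hashlib
import re
from typing import Callable, Dict

# Hypothetical rule: treat email addresses as the only sensitive field.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")


def redact(text: str) -> str:
    """Replace email addresses with a placeholder before text leaves the environment."""
    return EMAIL_RE.sub("[EMAIL]", text)


class LocalGateway:
    """Runs in the customer environment and fronts an external API (illustrative only)."""

    def __init__(self, external_call: Callable[[str], str]) -> None:
        # external_call stands in for whatever client sends the request outward.
        self._external_call = external_call
        self._cache: Dict[str, str] = {}

    def ask(self, prompt: str) -> str:
        safe_prompt = redact(prompt)  # transform locally
        key = hashlib.sha256(safe_prompt.encode("utf-8")).hexdigest()
        if key not in self._cache:  # cache locally
            self._cache[key] = self._external_call(safe_prompt)
        return self._cache[key]
```

In this sketch the external service only ever sees the redacted prompt, and repeated prompts are answered from the local cache without a second outbound call.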

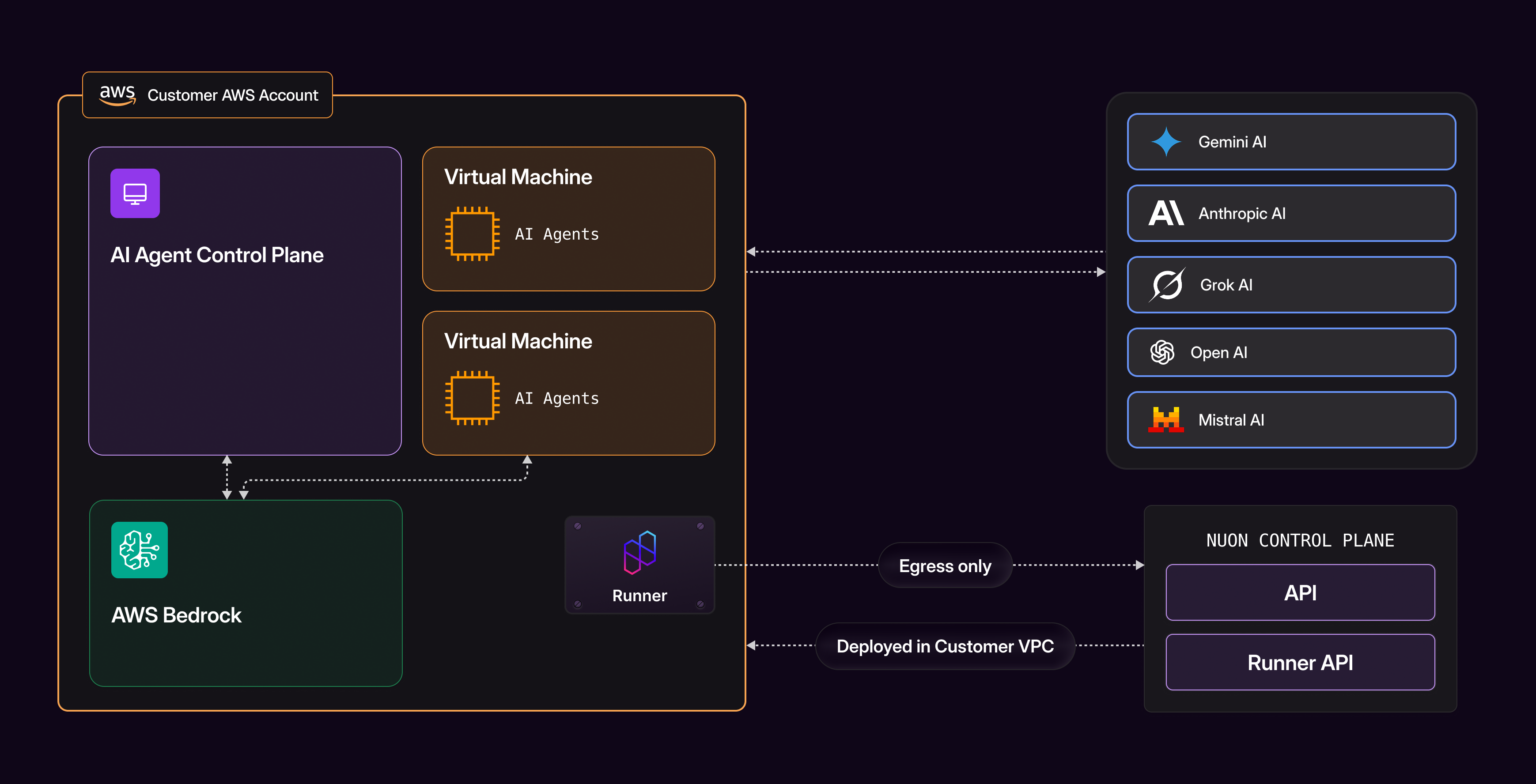

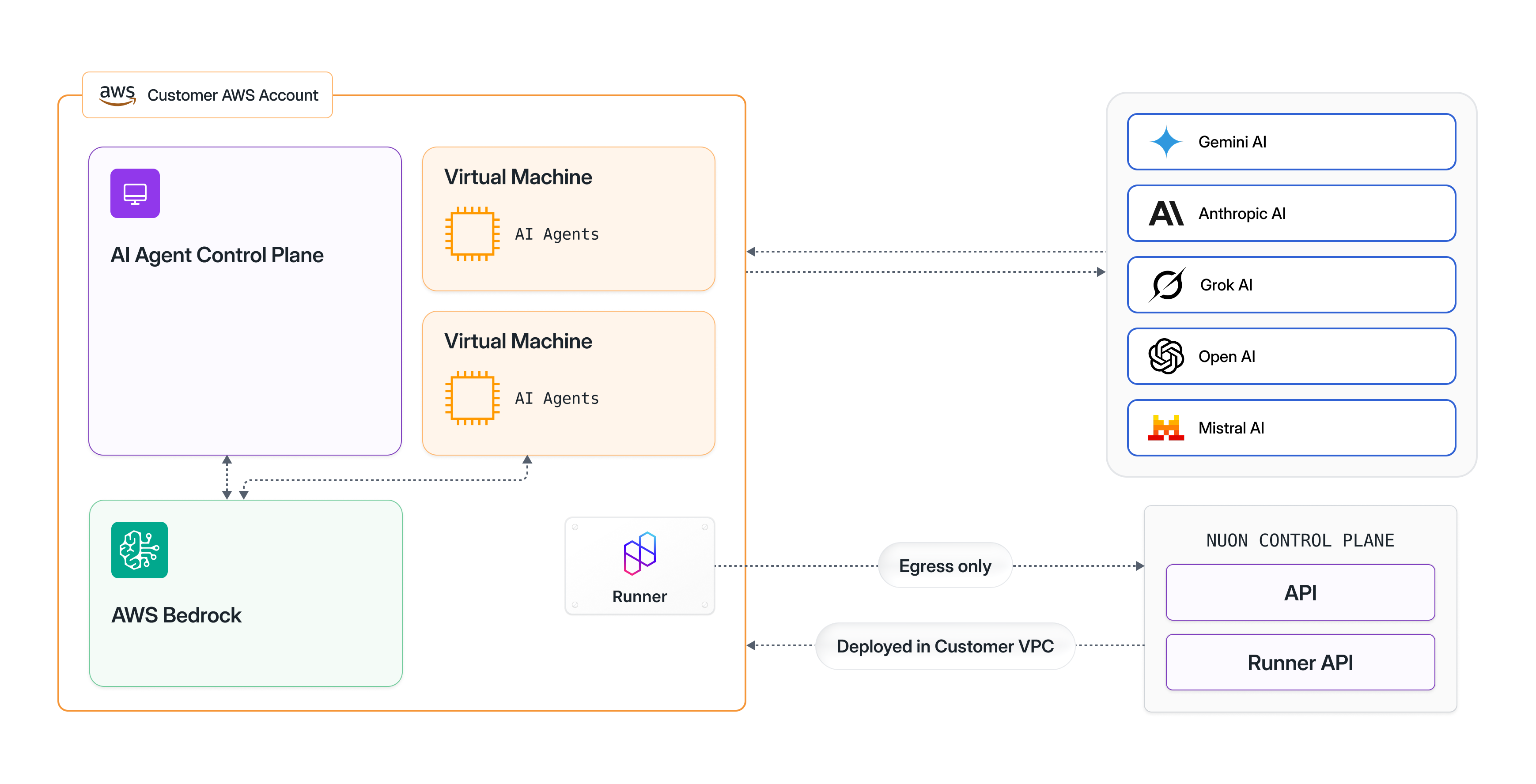

Agent Execution Environments

Run autonomous AI agents securely inside customer infrastructure. Host the orchestration layer that decides actions, manages workflow state, and executes tools while LLM calls remain external.
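The split described above, with orchestration hosted locally and LLM calls kept external, can be sketched as a small loop. This is an assumption-laden illustration rather than Nuon's implementation: `run_agent`, `AgentRun`, and the `decide` callback (a stand-in for an external LLM call) are all hypothetical names.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Tuple

# A decision is (tool_name, argument); the tool name "finish" ends the run.
Decision = Tuple[str, str]


@dataclass
class AgentRun:
    """Workflow state kept inside the customer environment."""
    goal: str
    history: List[Tuple[str, str, str]] = field(default_factory=list)


def run_agent(
    goal: str,
    decide: Callable[[AgentRun], Decision],  # stand-in for the external LLM call
    tools: Dict[str, Callable[[str], str]],  # tools execute locally
    max_steps: int = 5,
) -> AgentRun:
    run = AgentRun(goal=goal)
    for _ in range(max_steps):
        tool_name, arg = decide(run)
        if tool_name == "finish":
            run.history.append(("finish", arg, arg))
            break
        tool = tools.get(tool_name)
        # Unknown tools are recorded rather than raised, so the loop can continue.
        result = tool(arg) if tool else f"unknown tool: {tool_name}"
        run.history.append((tool_name, arg, result))
    return run
```

Only the `decide` callback would cross the network boundary in a design like this; tool execution and the run history stay local.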

Models

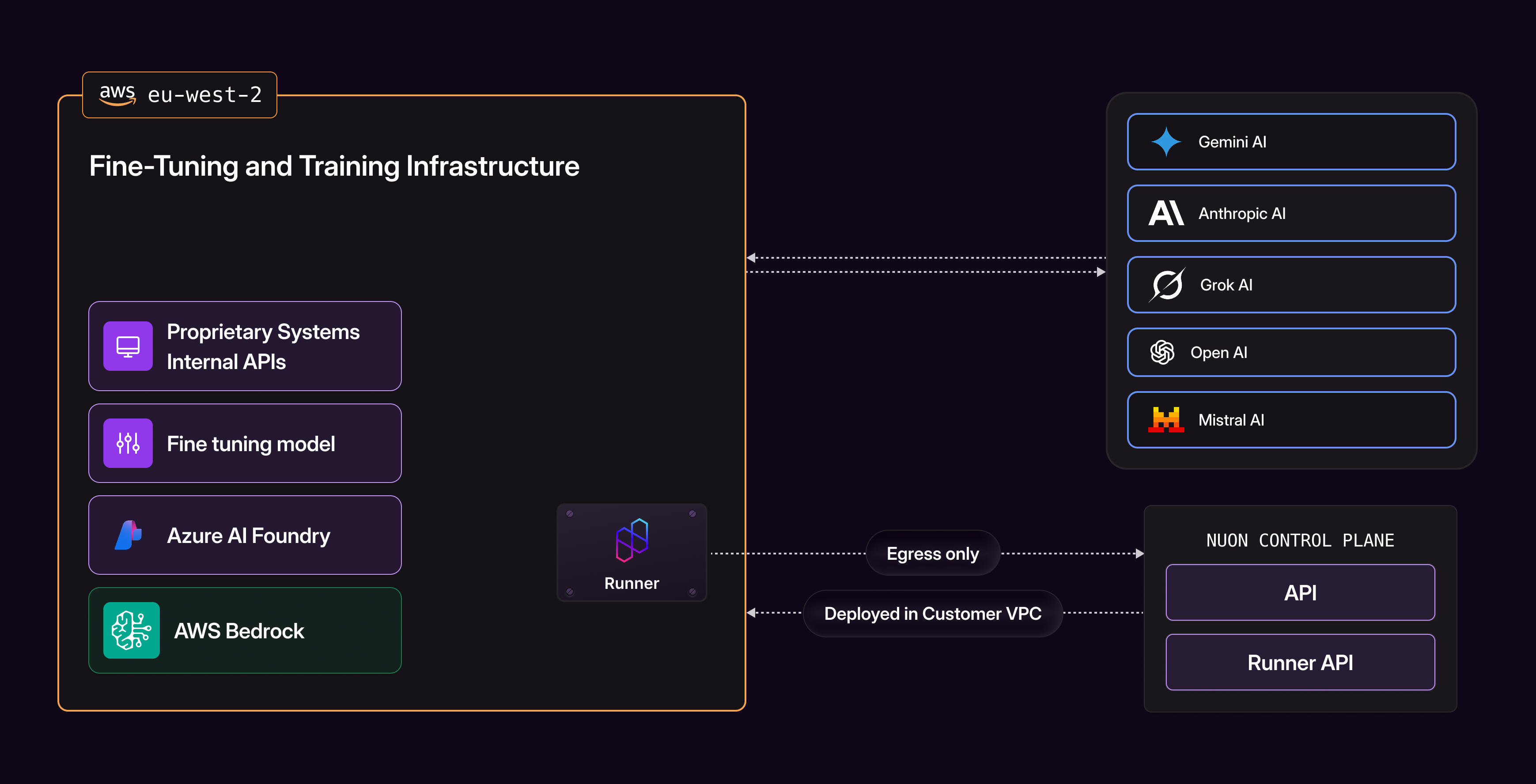

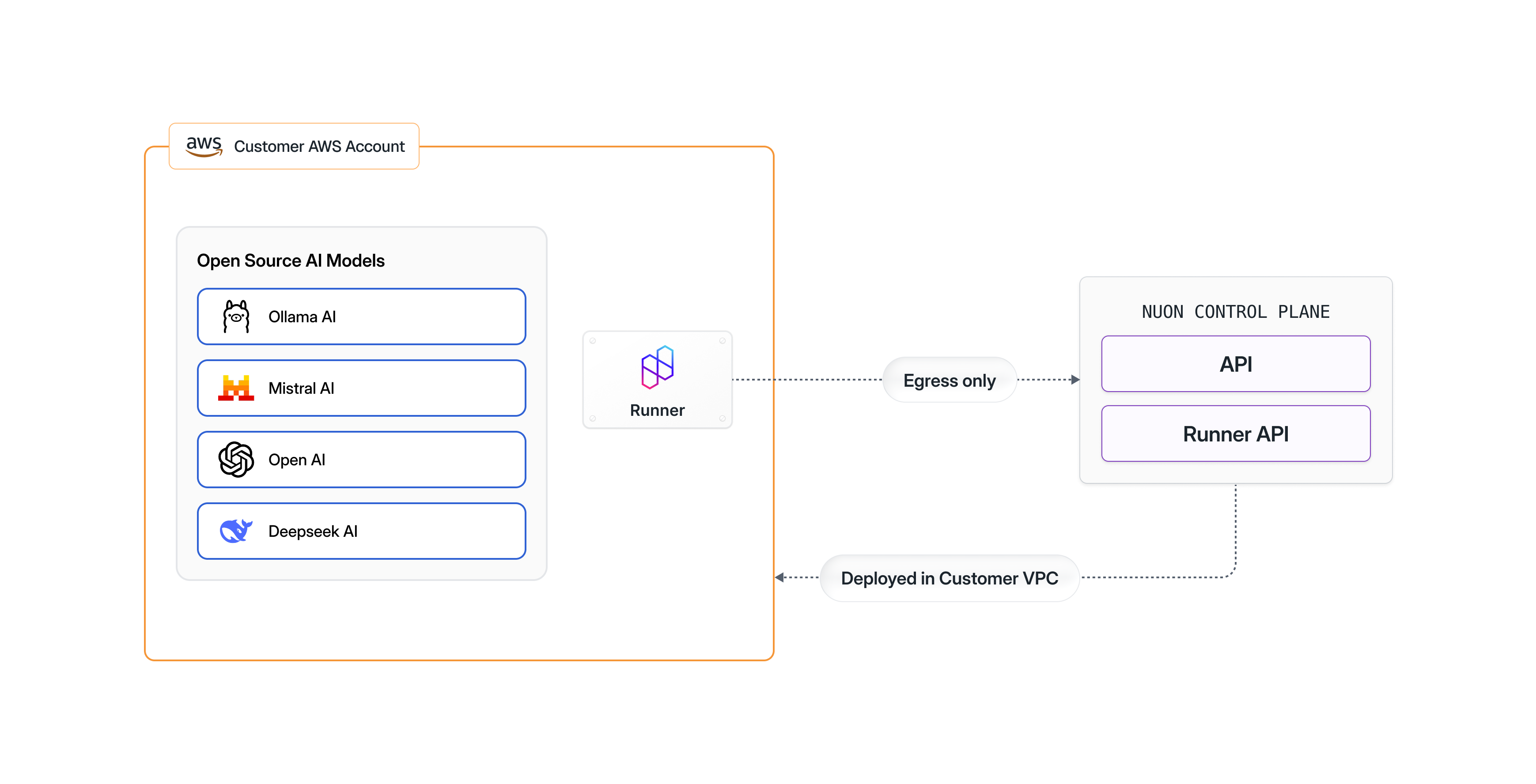

Open-Source Models and Fine-Tuning

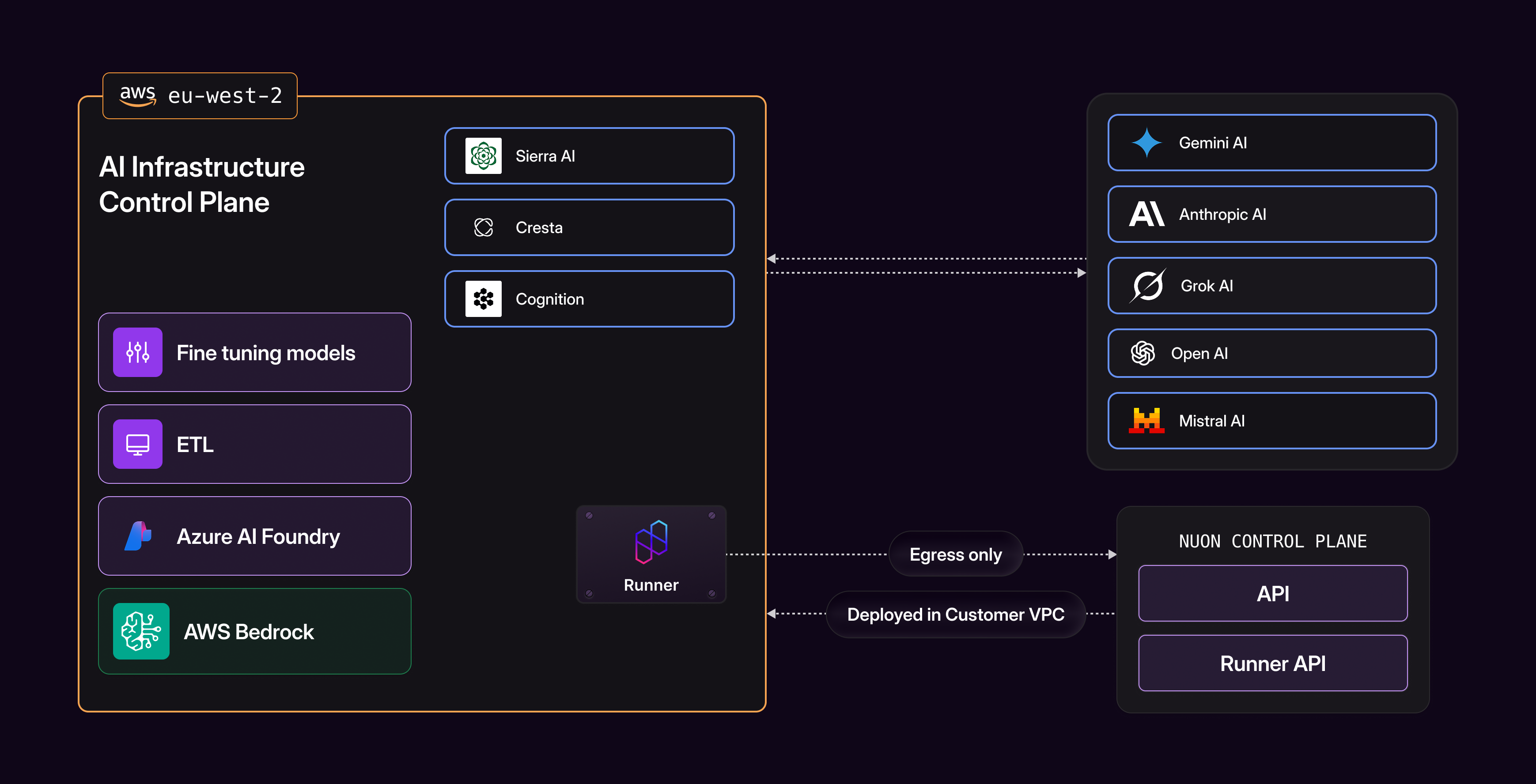

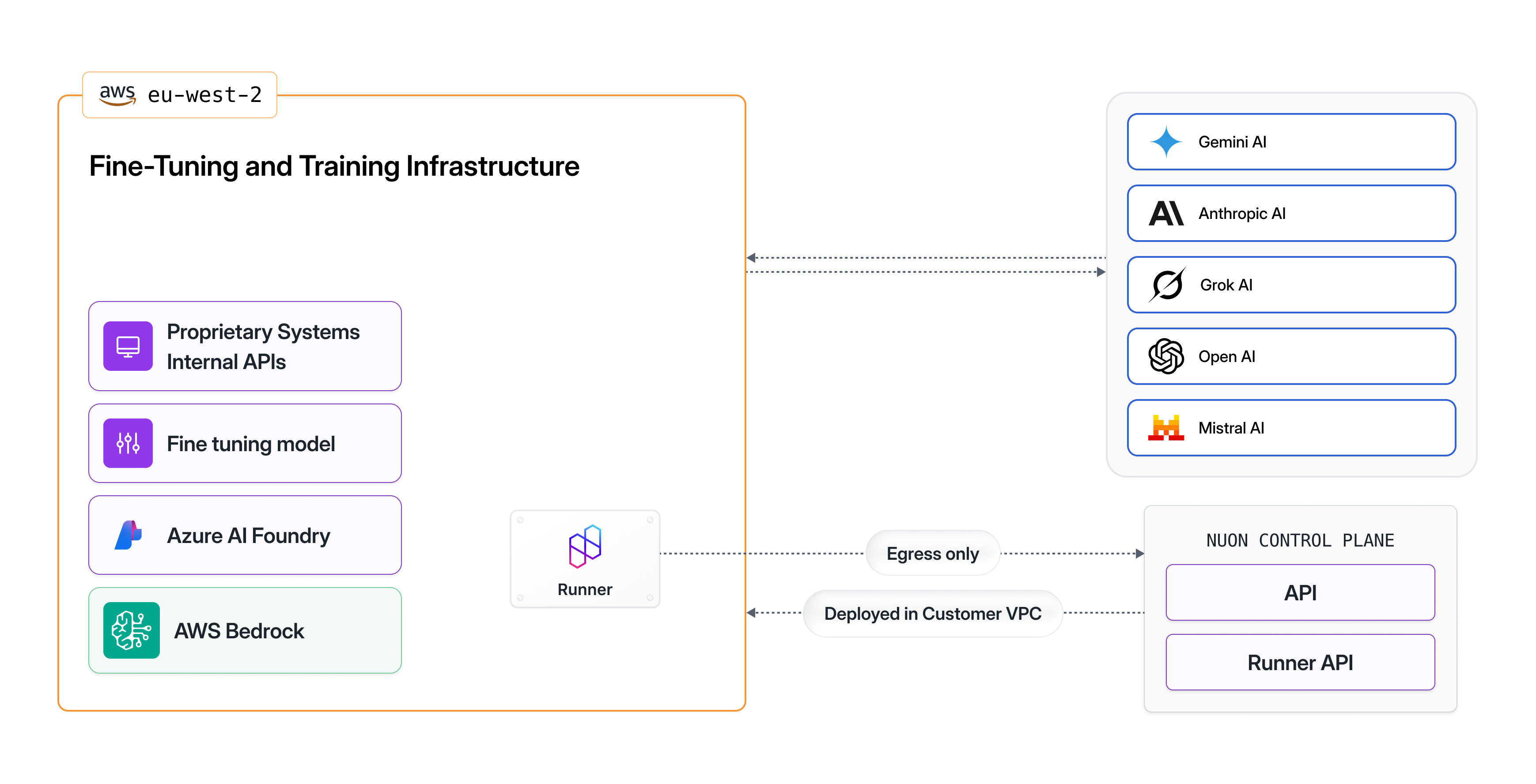

Fine-Tuning & Training Infrastructure

Deploy fine-tuning pipelines in customer clouds, where proprietary training data lives. Preprocess sensitive datasets locally before sending them to AI provider APIs to help meet compliance requirements.

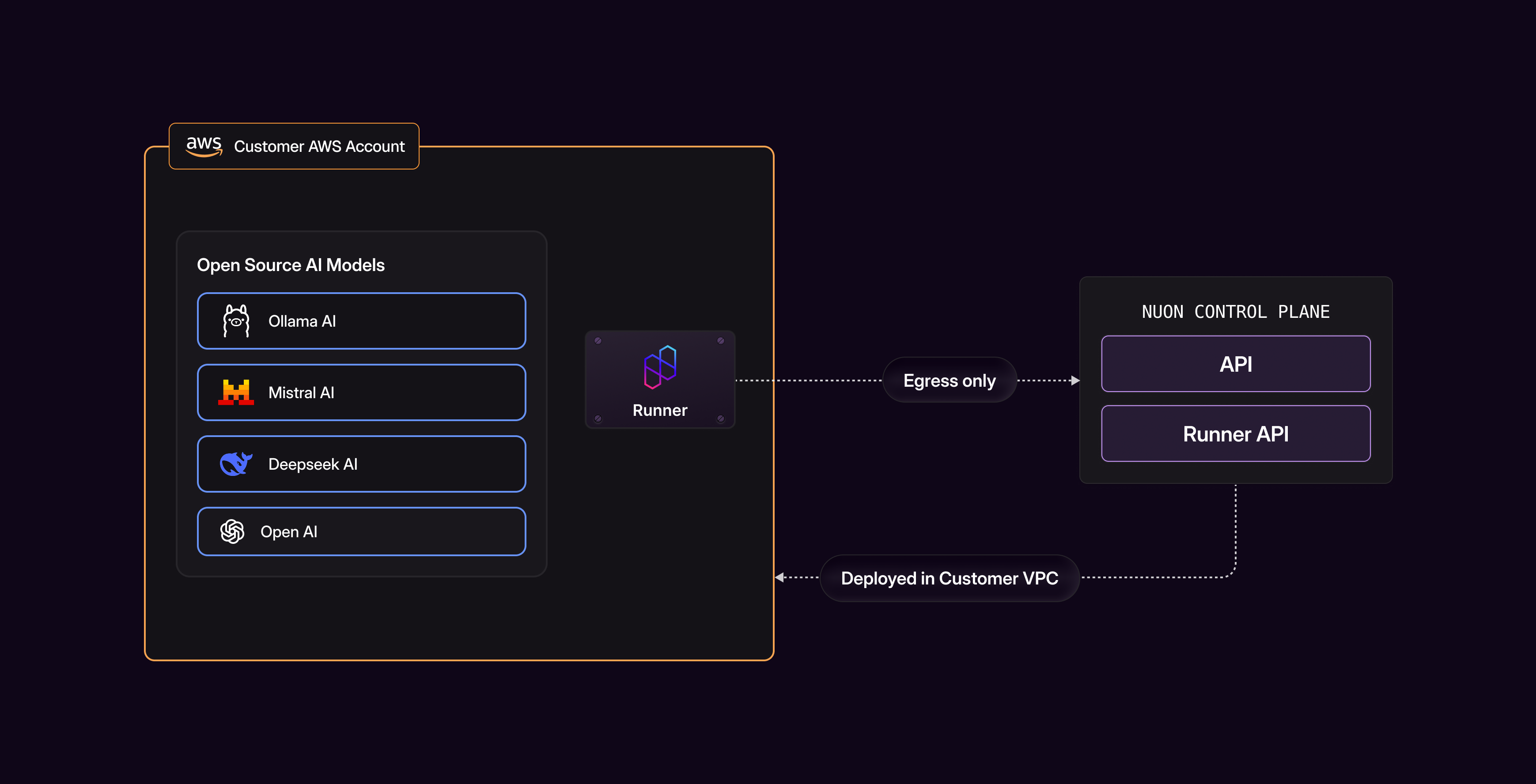

Self-Host LLMs in Customer Cloud Accounts

Host open-source models such as Llama and Mistral alongside AI applications in customer accounts. Co-locate them with Amazon Bedrock, Azure OpenAI, or private model infrastructure within one secure environment.

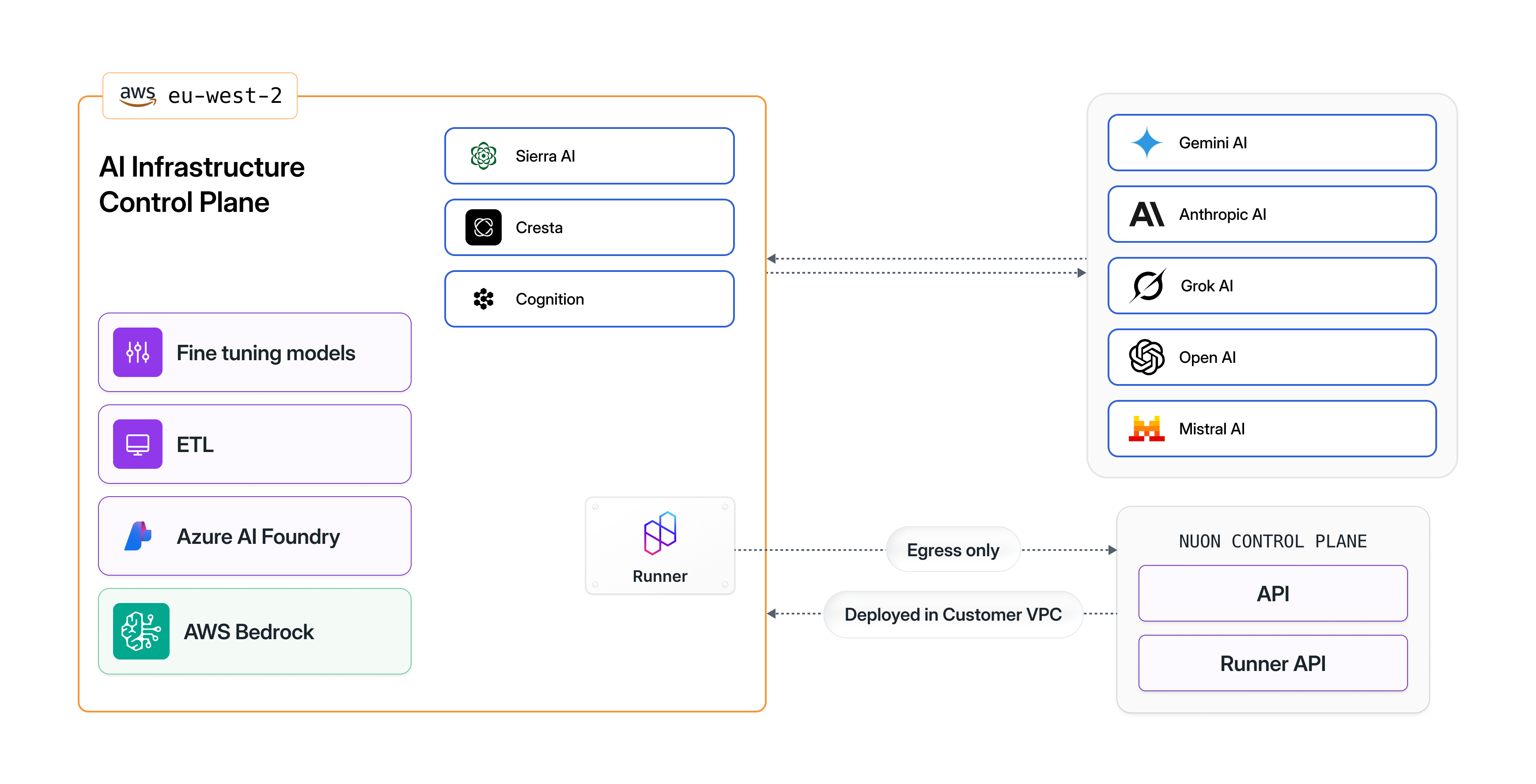

One Control Plane for AI Infrastructure

Deploy AI apps and infrastructure repeatably across customer clouds. Package once, install everywhere, from orchestration layers to RAG pipelines to agent environments.

Offer BYOC for any deployment architecture

Start your Nuon trial and try our example apps in minutes. No credit card required.